
If you are ever in need of just the audio portion of an .mp4 file, here are two commands and a loop to put the audio into an .mp3 file.

#!/bin/sh
for file in *.mp4
do
  echo $file
  "/cygdrive/c/Program Files/MPlayer/mplayer.exe" -ao pcm $file \
    -ao pcm:file=tmp.wav
  lame -h tmp.wav `basename $file .mp4`.mp3
done

(You might need to customize the path to mplayer.exe. I used an explicit path as I was on Windows and needed to pull in a specific cygwin dll for this executable. You may or may not have this issue.)

Have you ever wanted to merge several PDF files to create a single PDF file? Well, if you work with electronic documents you probably will. There is a very capable command line tool to carry out this and a range of other PDF related operations: pdftk. You can obtain a copy of pdftk here: http://www.accesspdf.com/pdftk and if you are interested in merging, or taking apart, PDF documents, this is the tool that you need.

For example, if you want to concatenate three PDFs into a single PDF file, here is the command:

pdftk A=a.pdf B=b.pdf C=c.pdf cat A B C output output.pdf

I use pdftk on Linux and Windows via Cygwin on a regular basis.

Wow, I needed to open a PDF file today. This particular file was password protected years ago and, of course, the password had been forgotten. 'Well, while I am thinking, I might as well try a quick Google on the subject of lost PDF passwords'. This rapidly took me to the SourceForge page for pdfcrack, and just as rapidly I had downloaded and built pdfcrack on Cygwin. So far so good, no surprises. However, the real shock was in how fast pdfcrack determined the password. It took just a few seconds - I hadn't even started to read the instructions and I had the password.

Pdfcrack was fast because the password was only 4 characters long, and pdfcrack was able to work through trial passwords rapidly (about 36,000 password attempts per second on this particular machine, a simple, slowww, laptop).

So, be warned: passwords for PDF files need to be long to turn 'a few seconds' into 'a few days', and so keep the files secure and their passwords unrecoverable.

Say 36,000 passwords per second is the standard speed for pdfcrack. How many letters (or characters) do you need in your password to make a PDF file generally safe against a brute force attack for 24 hours? Well, there are 24x60x60=86,400 seconds in 24 hours, and in this time pdfcrack can try 36,000x86,400=3,110,400,000 passwords (over three billion passwords). Say there are 60 characters that can be used in each position of the password; you will then need at least a 6 character password for reasonable PDF file security (because 60^5 = 777,600,000 < 3,110,400,000 but 60^6 = 46,656,000,000 > 3,110,400,000).
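The same arithmetic can be repeated for other guessing rates or time windows. Here is a minimal sketch in plain sh; the function name min_password_length is my own invention, and the three inputs (guesses per second, seconds, alphabet size) are assumptions that you should adjust to your own situation. It relies on the shell having 64-bit integer arithmetic.

```shell
#!/bin/sh
# Smallest password length whose search space exceeds the number of
# guesses an attacker can make in the given time.
# (Hypothetical helper; all three inputs are assumptions.)
min_password_length() {
  rate=$1      # guesses per second
  seconds=$2   # how long the password must survive
  alphabet=$3  # characters available in each position
  budget=$((rate * seconds))
  length=0
  space=1
  # Grow the search space one character at a time until it is
  # larger than the attacker's guess budget.
  while [ "$space" -le "$budget" ]; do
    space=$((space * alphabet))
    length=$((length + 1))
  done
  echo "$length"
}

min_password_length 36000 86400 60   # prints 6
```

For a full year (31,536,000 seconds) at the same rate the answer rises to 7 characters, which shows how slowly the required length grows compared with the attack time.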

Assuming, of course, that your password is not something that can be found in a dictionary. Most password recovery programs, like pdfcrack, can make use of a supplied dictionary of common passwords. So you absolutely need to avoid common words in passwords to ensure security, even if they are longer than 6 characters.

Recently, I have been making use of a third generation, 15 GB, iPod. Accordingly the problem of filling the iPod with .mp3 files emerges - and the standard solution for this is iTunes. Many people rave about iTunes, but I found it a very confusing program: I was never sure what was going to happen when a 'synchronization' occurred. I don't have much local disk space on the machine that could run iTunes, and as iTunes keeps a complete copy of what is on your iPod, it seemed to be an inefficient consumer of space (after all, the CDs themselves form an efficient backup of what is stored on your iPod). Additionally, iTunes is a little too obsessed with its online big brother(s) at Apple in the form of adverts and update information. So, what do you do if you don't want to use iTunes? Firstly, use cd-paranoia to extract .wav files from your CDs. This is a very simple operation:

cd-paranoia -B

Then convert the .wav files to .mp3 using lame. The command for this is simply:

lame -h input.wav output.mp3

Wrapping the lame command in a simple for loop makes the process painless. The resulting .mp3 files do not possess ID3 tags - but you can rapidly add them with another loop, using the mp3info program, for example:

for FILE in *.mp3
do
  echo $FILE
  mp3info -t `basename $FILE .mp3` -l AlbumTitle -a "Artist A" $FILE
done
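The lame loop mentioned above might look like the following sketch. The function names mp3_name and encode_all are hypothetical, and the loop assumes that lame is on your PATH and that the .wav files sit in the current directory.

```shell
#!/bin/sh
# Derive the .mp3 name that corresponds to a .wav file
# (hypothetical helper).
mp3_name() {
  echo "$(basename "$1" .wav).mp3"
}

# Encode every .wav file in the current directory with lame.
encode_all() {
  for wav in *.wav; do
    [ -e "$wav" ] || continue   # no .wav files: the glob stays literal
    echo "$wav"
    lame -h "$wav" "$(mp3_name "$wav")"
  done
}

# encode_all   # uncomment to run; requires lame on the PATH
```

Quoting the file names means that tracks with spaces in their names are handled without any extra effort.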

Once you have converted the CDs to .mp3 format, you can copy them to your iPod, and delete them from your hard drive. You will need to convince the iPod to allow you to play the tunes without using iTunes; there are several programs that accomplish this by building the necessary description files for the on-iPod software, e.g. retune and Floola.

A while back I found myself wanting to make a permanent copy of a talk given on the web using an rtsp address. You might want to do this to be able to share the talk with a friend or colleague - or to view later offline from the web. Here is how this objective can be achieved. The first step is to discover the rtsp address of the file. This is a little painful - but should always be possible. What worked for me was to launch the talk in a browser (which on Windows fired up the Real Player embedded in the browser). This gave me an option to launch the Real Player browser, and this in turn gave me the option to share the presentation with a friend using email. I took this option - and finally ended up with an email message which revealed the rtsp://... information required to capture the file. This looks like an http:// address - but to grab the file you need a program that can talk rtsp:// - so wget etc. cannot be used. MPlayer does have the requisite functionality though; here is the command line to capture the file:

mplayer -noframedrop -dumpfile example.rm \
  -dumpstream rtsp://"long complicated address/filename.rm"

Then you need to convert the captured example.rm file to wav format:

mplayer -ao pcm example.rm

And initially mplayer failed with 'Cannot find codec for audio format 0x72706973' for me - that was a little tedious - but it was cured by returning to the official MPlayer site and just putting the sipr*.dll (from the codecs zip file) in the MPlayer directory. So finally:

mplayer -ao pcm example.rm

...created 'audiodump.wav', which lame was able to turn into an mp3 file as required:

lame -h audiodump.wav example.mp3

So, it takes a few steps - but eventually you get the offline usable mp3 file.

Further notes on this topic:

Again I faced the question of off-line mp3 listening. Specifically, how to listen to an online news item from NPR - when I am constantly interrupted when I am online and would prefer something that I can listen to on my mp3 player or iPod shuffle. As the rtsp post indicates, the main problem is determining the rtsp address of the media source. Here is how to determine this for the NPR site.

1) Start up a browser listening to the interview or show that you would like to listen to off-line. (I used Firefox). This will spawn a 'player' browser window with the embedded control for the Windows Media Player or Real player within it.

2) Use the 'Launch Standalone Player' to fire up the Real player in isolation (switch to Real player if necessary)

3) This has the effect of downloading a 'rpm' file to your desktop - this file contains a url like this:

http://www.npr.org/templates/dmg/dmg_em.p....

4) Actually, this string is (deliberately?) very long and complex - and you need to turn this into an rtsp address. Do this using:

wget -O output.txt "http://www.npr.org/templates/dmg/dmg_em.p...."

I did this by just editing the rpm file, then typing:

source filename.rpm

Note that some additional quotes needed to be added to the http address.

5) This wget operation is fast - and you will find in the output.txt file an rtsp address that can be fed into the instructions for capturing an rtsp stream as an mp3 file.

Once you have determined the necessary steps for a given site, you can script them, and if appropriate have them happen using a crontab on an automated basis.

Say you want to reduce the size of a talk show mp3 file to maximize the number of mp3 files that you can load on your player. How do you do that? Here is a 'lame' command:

lame -b 40 -m m --resample 22.05 -S KentBeck_Large.mp3 KentBeck.mp3

This reduces the bit rate to 40 kbps (-b 40), sets the mode to mono (-m m), resamples at a frequency of 22.05 kHz (--resample 22.05), and doesn't print anything to the screen (-S). For the 36 megabyte file in this example - this saves 13.75 megabytes - and does not appreciably change the intelligibility of the recording.
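As a rough cross-check on such numbers, the size of a constant bit rate mp3 file is approximately the bit rate multiplied by the running time, divided by 8. The sketch below assumes 1 kbit = 1000 bits and ignores ID3 tags and frame padding; the function name mp3_bytes is hypothetical, and the 4450 second duration is my own illustrative figure (it is what the roughly 22.25 megabytes left over in the example above would correspond to at 40 kbps), not something measured from the original recording.

```shell
#!/bin/sh
# Approximate size in bytes of a constant-bit-rate mp3 file.
# Ignores ID3 tags and frame padding, so treat the result as an estimate.
mp3_bytes() {
  kbps=$1      # bit rate in kilobits per second (1 kbit = 1000 bits)
  seconds=$2   # running time in seconds
  echo $((kbps * 1000 * seconds / 8))
}

mp3_bytes 40 4450   # prints 22250000, about 22.25 megabytes
```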

A while back, I found that a trusted 1 GB USB drive was exhibiting apparent corruption. Some of the directories gave listings in which the file names were garbled. Fortunately the corrupted files in these directories weren't mission critical. However, I became concerned that valuable files that were on the root of the USB drive might disappear too. I was on the road - so my backup options were limited. However, the following commands rapidly salvaged, compressed, encrypted, and stored on gmail what was left on my drive. These commands might be useful to you, if you find yourself in similar circumstances. And, if you know what causes USB directory listing corruption, and how to avoid it, and/or recover from it, please let me know!

# Commands to extract data from a USB drive and copy to gmail for storage
# Firstly, copy what you can from your USB drive to a directory
# called (in this case) 'save'
mkdir save
cd save
cp -r e:/stuff .
# create a tar file of that saved directory
cd ..
tar -cvzf save.tgz save
# encrypt the tgz file
ccrypt -e < save.tgz > save.tgz.backup
# split the tgz file into ~ 10 MB chunks
split -b10000000 save.tgz.backup
# now mail the chunks to your gmail account
for file in x*
do
  echo $file
  echo $file | mutt -a $file -s $file youremailaddress@gmail.com
done

And to reassemble the original files you proceed as follows:

# download the gmail attachments, then
cat x* > temp.tgz.backup
# then decrypt them with:
ccrypt -d < temp.tgz.backup > temp.tgz
# and finally un-tar the data
tar xvof ./temp.tgz
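Before trusting a backup like this, it can be reassuring to confirm that splitting and re-joining really does reproduce the original file. The following is a minimal sketch of such a check, assuming the standard split, cat, cksum and mktemp utilities; the function name roundtrip_check is hypothetical, and the check covers only the split and cat steps, not the encryption or the mailing.

```shell
#!/bin/sh
# Split a file into chunks, reassemble the chunks, and compare checksums.
# Prints "ok" if the rebuilt file matches the original, "MISMATCH" otherwise.
roundtrip_check() {
  original=$1
  chunk_bytes=$2
  workdir=$(mktemp -d) || return 1
  # split names the chunks xaa, xab, ... inside the work directory
  split -b "$chunk_bytes" "$original" "$workdir/x"
  # the shell expands x* in sorted order, which matches split's naming
  cat "$workdir"/x* > "$workdir/rebuilt"
  if [ "$(cksum < "$original")" = "$(cksum < "$workdir/rebuilt")" ]; then
    echo ok
  else
    echo MISMATCH
  fi
  rm -rf "$workdir"
}

# roundtrip_check save.tgz.backup 10000000
```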

If you download a flash movie from YouTube or Google videos - often it is convenient to convert it to mpeg format. On Linux and Windows (under Cygwin) this can be achieved using ffmpeg, for example:

ffmpeg -i input.flv -ab 56 -ar 22050 -b 500 -s 320x240 output.mpg

And if you want another format, simply change the file extension on your output file. So for mp4, for example, the command would be:

ffmpeg -i input.flv -ab 56 -ar 22050 -b 500 -s 320x240 output.mp4

Sometimes it is useful to extract an mp3 file from an mpeg or mpg file. This is a task that is handled efficiently using ffmpeg and lame. These tools are available for Linux and for Windows via Cygwin.

Here is an example:

ffmpeg -i example.mpg example.wav
lame -h example.wav example.mp3

Almost all DVD players can also play VCD format disks. VCD format disks are made with normal CD media and so are less expensive than DVD disks. VCD cannot store as much information as DVD (which translates to shorter movie lengths). But if you are making DVDs which do not run for hours - you can probably use VCD instead of DVD and save money. Here are the commands necessary to create a VCD disk from a flash (flv) file. We will take the example flash file 'example.flv'. First you need to convert your flash file to mpg, and at the same time we will also use ffmpeg to convert the flash file to the standard required by VCD (using the -target ntsc-vcd option). I found that it was also necessary to make sure that there were two audio channels (the -ac 2 option).

ffmpeg.exe -i example.flv -target ntsc-vcd -ac 2 temp.mpg

The next step is to create the VCD image. The tool to use for this is "vcdimager". The following command line takes the temp.mpg file and converts it to a disk image/cue file pair that can be used to burn the VCD disk.

vcdimager -t vcd2 -l "Example" -c vcd.cue -b vcd.bin temp.mpg

(If you have multiple mpg files to add to the disk just replace 'temp.mpg' with 'temp1.mpg temp2.mpg temp3.mpg' etc.) For the burn operation, use cdrdao. Here is the command line that I used on Cygwin on Windows XP.

cdrdao write --device 1,0,0 --driver generic-mmc-raw --force --speed 4 vcd.cue

Getting cdrdao working on Cygwin took a minor amount of effort - I will post a note on how to do that in the near future. On Linux you should have no problems whatsoever. If you follow these directions, you'll be able to save and share VCDs of anything that starts life as a flash movie file (flv) - or any other format that ffmpeg can deal with.

I thought I would record the following experience in case it helps others who encounter the same problem. I have an old Dell Latitude D830 laptop and generally leave it in standby mode at the end of the day.

Standby mode uses little power and makes the slow Windows XP reboot unnecessary. However, last week I was away from the office and the machine in standby mode, and detached from the power supply, apparently ran out of battery power. When I came to power up the machine, rather than springing back from standby mode, it began a normal reboot sequence. However, this reboot sequence was far from normal in its ending. After the Windows XP logo appeared with its blue progress bar, the screen suddenly went completely black.

Very strange and unsatisfactory behavior! Googling through the various sites discussing this topic, I quickly came to appreciate that there are a multitude of ways in which this tedious effect can be achieved. Rebooting in safe mode or VGA mode worked fine, but any attempt to get to the normal screen resolution resulted in the blank screen after the Windows XP logo part of the reboot sequence.

To solve the problem I tried rebooting in safe mode and reloading graphics drivers as originally downloaded from Dell. This did not solve the problem. I then tried just using the machine with remote desktop, after booting it into VGA mode. This worked well, but wasn't quite what I was used to, and certainly did not constitute a fix, and so I Googled further. I tried 'repairing' the operating system using a Windows XP disk. (That was a mistake - see below). Eventually, I ran into a site which described how someone logged onto the machine, although the screen was black. So - having nothing to lose, I tried that. I typed username - and tab - and password a few times. Something in the blankness was happening, as there were some typical Windows XP clonking sounds emitted from the machine. Then I must have hit the correct sequence, as the display suddenly lit up, and carried on working as though nothing had ever happened.

Of course, the Windows XP repair attempt had the effect of removing all the vital operating system updates that had been put on the machine. So, a large Windows update was then required to restore SP3 and all the other updates thought to be vital to my security. So, the conclusions are firstly that Windows XP and standby mode can be fragile under some rare circumstances. Secondly, curing tedious Windows XP start up problems can be mysterious. I speculate that my habit of putting the machine into standby mode may be quite risky when perilously short of diskspace. Probably at some point the standby mode program decides that the battery will expire soon and decides to write everything out to disk, but without checking properly that there is enough diskspace to save everything. Then, somehow or other, some special state for the graphics card of blankness (because the lid is closed) is saved, and the machine crashes. This state will be overwritten the next time someone logs on successfully. However, the next logon is effectively prohibited by the fact that the screen is blank.

Hence, users are forced into the rather tedious process of either wiping the machine and starting again, or buying another machine and making another donation to Microsoft in the process for another, equally buggy, operating system.

But - if you have a blank screening Windows XP laptop, that almost boots but not quite, try typing in your credentials at what should be the log in screen. You may find that this clears the problem - and although it is not as satisfying as repairing the operating system, replacing graphics drivers, or buying a new machine, it may just get your machine going again.

I recently had occasion to add a disk to a virtual machine running Fedora Core 5. Without much system administration experience, and with an inclination emboldened by the fact that the machine was running on VMWare on a Windows XP machine, I found the operation straightforward. Here are the necessary steps:

1. Add the hardware to the machine (virtually in my case, using the VMWare UI).

2. Use fdisk -l to establish the name of the hard drive that you have hooked up to the machine. The answer in my case was /dev/sda. The drive name information will be distinguished by the fact that the target drive is reported as not having a valid partition table.

3. Use fdisk /dev/sda to partition the new disk. This involves typing 'm' to get a help listing, 'n' to add a new partition, 'p' to select a primary partition, '1' to specify the partition number, accepting the default first and last cylinders, and 'w' to write the partition information to the hard disk.

4. Use mkfs.ext3 /dev/sda1 to write a file system to the new partition.

5. All that then remains is to mount the partition so that it is accessible to the OS. First create a mount point with mkdir /mnt/disk2, then issue a mount command to hook the partition to the mount point:

mount -t ext3 /dev/sda1 /mnt/disk2

Then you can go on to work with your disk space hungry software.

Say you want to collect all your jpg graphics files from one computer and transfer them to another computer - what is the best way of going about this?

If you are well organized you can just transfer one directory from one machine to the other. However, frequently we spread files around on a machine (or our various programs do) and what is first needed is a search to find the important information - then collect it all in one place - and finally a transfer to its new location. Here is how you can go about collecting and saving all the jpg files on a machine.

Firstly - if you have a Windows machine - make sure that you have access to Cygwin so that you can use the general Linux command line options and commands that Cygwin makes available. If you have a Linux or OS X machine - you are good to go. To achieve your objective you are going to need to:

1. Find the files

2. Clean up the names

3. Make the files list into a tar archive

4. Transfer the archive to the other machine (using ftp, rsync, etc.)

5. Extract the files (using tar xvzf filename.tgz)

To find the files - the find command is the appropriate tool:

find . -name "*.jpg" -print

Resulting in:

find . -name "*.jpg" -print
./My Music/AlbumArtSmall.jpg
./My Music/Folder.jpg
./My Pictures/DSC00388.jpg
./My Pictures/MyPicture.jpg
./My Pictures/UpgradeDialog.jpg

However, there are also files which have the extension .JPG and there might be files with .jpeg and any capitalization combination between these choices. So use the following find command:

find . \( -name "*.[jJ][pP][eE][gG]" -o -name "*.[jJ][pP][gG]" \) -print

This might seem complex, but the segments in square brackets (like [jJ]) enable the find command to select files with any possible capitalization pattern of jpg or jpeg as an extension - and print out the path of the file. The output of the command is now:

find . \( -name "*.[jJ][pP][eE][gG]" -o -name "*.[jJ][pP][gG]" \) -print
./a.jpEg
./My Music/AlbumArtSmall.jpg
./My Music/Folder.jpg
./My Pictures/DSC00388.jpg
./My Pictures/IMG_0175.JPG
./My Pictures/IMG_0176.JPG
./My Pictures/IMG_0180.JPG
./My Pictures/MyPicture.jpg
./My Pictures/New Folder/IMG_0391.JPG
./My Pictures/New Folder/IMG_0396.JPG
./My Pictures/New Folder/IMG_0398.JPG

So, finding the files is no longer a problem. However, they must be saved in a suitable archive - so that they can be transferred together. There are several possible commands (zip is one possible choice). But let's use the simple tar command. To glue the find output and the tar command together use the xargs command. This takes a list, typically of files as provided by find, and passes them as arguments to the command specified as its first argument. As xargs uses spaces to delimit its own arguments - it is necessary to make sure that any spaces in filenames are appropriately escaped. Hence some sed is required. The short sed script is shown below - it has the effect of escaping any non-alphabetic or non-numeric character in the filename. This is a good remedy for the various other characters which may be inserted in Windows file names that on occasion can confuse the Cygwin command line (like ampersands and dollars, for instance). The sed command says 'for characters which are not alphanumeric, replace them with the character itself with a backslash prepended to the character'. (There are 5 backslashes in the sed script - to escape the backslashes from the shell and to account for the backslash-1 nomenclature that sed uses to refer to the matched token, the non-alphanumeric character.) So the command to output cleaned up filenames now looks like this:

find . \( -name "*.[jJ][pP][eE][gG]" -o -name "*.[jJ][pP][gG]" \) \
  -print | sed -r "s/([^a-zA-Z0-9])/\\\\\1/g"
\.\/a\.jpEg
\.\/My\ Music\/AlbumArtSmall\.jpg
\.\/My\ Music\/Folder\.jpg
\.\/My\ Pictures\/DSC00388\.jpg
\.\/My\ Pictures\/IMG\_0175\.JPG
\.\/My\ Pictures\/IMG\_0176\.JPG
\.\/My\ Pictures\/IMG\_0178\.JPG

As you can see from the output - the term 'cleaned up' is used loosely. However, the good news is that xargs can deal with this input easily. The command to hook up tar to this output is "xargs tar -rvf jpg.tar" which says from the stream of files supplied to xargs, provide them as arguments to tar, in append mode (-r) to add them to the tar archive (-f) jpg.tar. The (-v) option makes tar run in verbose mode so that you can see what it is doing. Here is the command now:

find . \( -name "*.[jJ][pP][eE][gG]" -o -name "*.[jJ][pP][gG]" \) \
  -print | sed -r "s/([^a-zA-Z0-9])/\\\\\1/g" | xargs tar -rvf jpg.tar
./a.jpEg
./My Music/AlbumArtSmall.jpg
./My Music/Folder.jpg
./My Pictures/DSC00388.jpg
./My Pictures/IMG_0175.JPG
./My Pictures/IMG_0176.JPG
./My Pictures/IMG_0178.JPG
./My Pictures/IMG_0179.JPG
./My Pictures/IMG_0180.JPG
./My Pictures/MyPicture.jpg
./My Pictures/New Folder/IMG_0391.JPG

Now all the jpg files are safely contained within a single archive, jpg.tar - and this file can be transferred to another computer. The files can then be extracted using:

tar -xvf jpg.tar

And you are done...!
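If your find and xargs support NUL-delimited names, the sed escaping step can be avoided altogether. The sketch below is an alternative to the pipeline above, not the method I originally used: it assumes the -iname and -print0 options of find and the -0 option of xargs (available in the GNU and BSD versions, but not guaranteed everywhere), and the function name collect_jpgs is hypothetical.

```shell
#!/bin/sh
# Collect every .jpg/.jpeg file (any capitalization) below the current
# directory into a tar archive. Names are passed NUL-delimited, so spaces,
# ampersands and dollars in file names need no escaping.
collect_jpgs() {
  archive=$1
  find . \( -iname "*.jpg" -o -iname "*.jpeg" \) -print0 |
    xargs -0 tar -rvf "$archive"
}

# collect_jpgs jpg.tar   # run from the top of the tree you want to search
```

As before, tar runs in append mode (-r), so if xargs has to invoke tar more than once for a very long list of files, everything still ends up in the same archive.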

I recently needed to extract the important portion of one mp3 file. I also recently had the opposite problem of joining two related mp3 files. Here is how these operations can be achieved. Firstly, to extract an important portion of a given mp3 file you can use the following command:

ffmpeg -i input.mp3 -ss "0:35:26" -t 724 output.mp3

This starts the extraction at 35 minutes 26 seconds into the mp3 file called input.mp3. The extraction then runs for a duration of 724 seconds, and the output is stored in output.mp3.
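If you know where the interesting portion ends rather than how long it runs, a little arithmetic converts between the two. Here is a small sketch; the function names to_seconds, to_hms and end_time are my own, and awk is used for the parsing so that values with leading zeros (such as 08) are read as decimal numbers.

```shell
#!/bin/sh
# Convert h:mm:ss to a number of seconds.
to_seconds() {
  echo "$1" | awk -F: '{ print $1 * 3600 + $2 * 60 + $3 }'
}

# Convert a number of seconds to h:mm:ss.
to_hms() {
  t=$1
  printf '%d:%02d:%02d\n' $((t / 3600)) $((t % 3600 / 60)) $((t % 60))
}

# Given a start time and a duration in seconds, print the end time.
end_time() {
  start=$(to_seconds "$1")
  to_hms $((start + $2))
}

end_time 0:35:26 724   # prints 0:47:30
```

So the extraction in the example covers the part of input.mp3 from 0:35:26 to 0:47:30. Going the other way, subtracting to_seconds of the start from to_seconds of the end gives the value for -t.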

To combine two mp3 files, you can simply concatenate the individual mp3 files:

cat file1.mp3 file2.mp3 > file3.mp3

When you have collected a set of mpg's from flv's from YouTube and similar places - how do you make your own DVD or make a DVD to give to a friend or relative?

Here we are talking about a normal DVD that you can play on a normal DVD player - not just on your computer - which can be convenient. Typically TV screens lead to nicer images than computer screens and they are designed to be viewed from many angles. It has to be said that the quality of the video is limited to the quality of the input flv (flash) file. Typically this is not as good as HD video - so be prepared for that.

To make a DVD proceed as follows:

ffmpeg -i normal.mpg -target ntsc-dvd dvdmpg.mpg

This converts your normal mpg into an mpg suitable for inclusion in a DVD. If you are in Europe or another region that uses PAL rather than NTSC, you will need to adjust the ntsc-dvd argument (to pal-dvd). The conversion takes a little while - so you will probably want to leave that running while you do other things. If you don't have an mpg input file - ffmpeg will determine the file type from the extension and generally it will do the 'right thing' - so proceed accordingly if your input files are mp4, m4v, avi, or flv (etc.)

The next step involves creating the directory structure and file contents of your DVD. The DVD format is quite fussy - and DVDs which do not have the correct format are not recognized by normal domestic players. So, use the 'dvdauthor' command to create your DVD layout. This is simple - you will get a simple continuously viewable DVD without menus etc. if you follow these instructions. If you explore the documentation for dvdauthor you can create more sophisticated DVDs too.

So create yourself a little xml file which describes your DVD.

This is what the file (dvdauthor.xml) looks like:

<dvdauthor>
  <vmgm />
  <titleset>
    <titles>
      <pgc>
        <vob file="temp1.mpg" chapters="0" />
        <vob file="temp2.mpg" />
        <vob file="temp3.mpg" />
        <vob file="temp4.mpg" />
        <vob file="temp5.mpg" />
        <vob file="temp6.mpg" />
        <vob file="temp7.mpg" />
        <vob file="temp8.mpg" />
      </pgc>
    </titles>
  </titleset>
</dvdauthor>

Then use the xml file above to create the directory structure for the DVD:

dvdauthor -o example -x dvdauthor.xml

Then use the mkisofs command to create the .iso to burn your DVD:

mkisofs -dvd-video -o example.iso example

You will be left with an iso image file of your DVD that you can then burn to DVD using your preferred DVD burning software...and that DVD will be a normal movie DVD.
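If you make DVDs like this regularly, typing the xml file by hand becomes tedious. The following sketch writes a dvdauthor.xml of the same shape as the one above for whatever mpg files you name on the command line. The function name make_dvdauthor_xml is hypothetical, and file names containing quotes or ampersands are not escaped, so keep the names simple.

```shell
#!/bin/sh
# Print a minimal dvdauthor XML description for the given mpg files.
# The first file carries chapters="0", as in the hand-written example.
make_dvdauthor_xml() {
  echo '<dvdauthor>'
  echo '  <vmgm />'
  echo '  <titleset>'
  echo '    <titles>'
  echo '      <pgc>'
  first=1
  for mpg in "$@"; do
    if [ "$first" -eq 1 ]; then
      printf '        <vob file="%s" chapters="0" />\n' "$mpg"
      first=0
    else
      printf '        <vob file="%s" />\n' "$mpg"
    fi
  done
  echo '      </pgc>'
  echo '    </titles>'
  echo '  </titleset>'
  echo '</dvdauthor>'
}

# make_dvdauthor_xml temp1.mpg temp2.mpg temp3.mpg > dvdauthor.xml
```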

In addition to RCS and subversion there is CVS. CVS can be more intimidating than RCS for casual use but it is more powerful. This article provides an illustration of simple CVS use - if you have not used CVS - and you have a large collection of files that you would like to put under source control, this article will get you started. I have kept the formatting compact - with comments beginning with '#' in the midst of the commands.

#First let's create an example directory
#with two files in two directories
mkdir example
cd example
mkdir a
mkdir b
touch a/a.txt
date > b/b.txt
#An ls -aR will show you the structure of the example directory
ls -aR
#Now create a local cvs repository
cvs -d ~/cvsexample init
#And import the current directory into it, calling the project
#in the repository 'example'
cvs -d ~/cvsexample import -m "" example example initial
cd ..
#Move the example directory out of the way
mv example example-old
mkdir tmp
cd tmp
cvs -d ~/cvsexample co example
#The cvs controlled example directory is now in ~/tmp/example
#From now on we work in this directory - let's do some example work
cd example/
ls
echo "Another line" >> b/b.txt
cvs diff
#You will be prompted for a commit message
cvs commit
cvs log b/b.txt
echo "Yet another line" >> b/b.txt
#You will again be prompted for a commit message
cvs commit
cvs diff -r1.1 -r1.2 b/b.txt
#And so on....you edit, commit, diff and track your work using cvs
#e.g. to see all the changes to b/b.txt
cvs log b/b.txt

As you can see - this is straightforward - and keeping annotated versions of your work under CVS will soon become second nature. You can add more sophistication as your projects get larger and the number of team members working on the files increases - but for simple personal projects the commands in this article will pay dividends. An excellent, free, online resource of detailed CVS information is available in Open Source Development with CVS, 3rd Edition by Karl Fogel and Moshe Bar.

If you want to do software related work with a Windows machine - you need to have reliable access to Gnu related capabilities. Hence you need Cygwin. Here is how I configure Cygwin.

Many people must make use of Windows too for a variety of reasons. Windows is not good at automation. A popular solution to this problem - which is often better than maintaining two machines, dual booting or using virtual machines - is Cygwin. Cygwin allows you to use familiar and convenient command line utilities on Windows. The Cygwin site describes the installation procedure - the installer is a little non-standard - and I generally install everything so that I don't have to use the installer too often. (This uses a fair amount of diskspace, it has to be said).

A minor tribulation with the default installation is that the bash shell which Cygwin provides is by default hosted in Windows' cmd.exe, which has fairly awful cut-and-paste functionality - among other problems. Fortunately you can resolve this by locating your cygwin.bat file, generally in c:\cygwin\, and updating it to be as follows:

@echo off
C:
chdir C:\Cygwin\bin
rxvt -sl 10000 -rv -fn 'Courier New-18' -e bash --login -i
pause

(You will want to copy cygwin.bat to a backup copy before making your edits). This will have the effect of launching rxvt instead of cmd.exe when you fire up a Cygwin shell. The command line options provide for 10,000 lines of scrollback history, reverse video display (i.e. a black background), a large fixed width font and the execution of bash in the resulting window, in full startup and interactive mode. Of course, as you now have Cygwin installed, you can obtain additional detail with man rxvt and man bash, and tune the options to match your needs. With rxvt you copy text by simply highlighting it, and you paste by either using the middle mouse button - or, if you have a touchpad laptop, using shift left-mouse button. The net result is a substantial improvement over cmd.exe.

It seems that once every few years I have to learn a new revision control system. Once I have learned some basic commands, I then spend a little bit of time every week explaining how to use the system to others! Meanwhile people spend time trying to explain to me that this or that graphical interface on top of the revision control system is of vital importance. These graphical systems evolve with time, sometimes involve emacs and vim, and typically require ongoing care and attention. But the command line operations stay static...until eventually a decision is made to move to a new system, and the cycle repeats.

This script helps with that process by educating me on how to control the revision control system from the command line, and sometimes it even helps to convey the knowledge about the very few commands necessary to work with a given system, before the need to look things up on the web strikes.

This script creates a subversion (svn) repository and conducts some simple operations on that repository. You can use this script to learn how subversion works, and how to work with subversion. The script will create a svn directory in your home directory, and trunk and branch (called 2xxx-01-01), directories. These can optionally be removed at the end of the script.

#!/bin/bash

REPODIR=$HOME/svn

REPO=file://$REPODIR

function svn_cmd() {

echo "Comment: " $1

if [ -z "$3" ]

then

echo "Executing: " $2

$2

else

echo $1 > tmp.txt

echo "Executing: " $2 --file tmp.txt

$2 --file tmp.txt

rm -f tmp.txt

fi

echo ""

}

if [ -d $REPODIR ]

then

echo "Repository alread exists, skipping creation"

echo "(delete $HOME/svn if you want to start from scratch)"

else

svn_cmd "Creating repository" "svnadmin create --fs-type fsfs $HOME/svn"

fi

# create, remove, and create the trunk

svn_cmd "What does the repository contain?" "svn ls $REPO"

svn_cmd "Creating trunk dir" "svn mkdir $REPO/trunk" cmt

svn_cmd "What does the repository contain?" "svn ls $REPO"

svn_cmd "Removing trunk dir" "svn rm $REPO/trunk" cmt

svn_cmd "What does the repository contain?" "svn ls $REPO"

svn_cmd "Creating trunk dir" "svn mkdir $REPO/trunk" cmt

svn_cmd "Checkout trunk" "svn co $REPO/trunk"

cd trunk

echo "line 1" > test.txt

# add a file, and a branch

svn_cmd "Adding test.txt" "svn add test.txt"

svn_cmd "Initial commit" "svn commit" cmt

svn_cmd "Creating branches dir" "svn mkdir $REPO/branches" cmt

svn_cmd "Create a branch" "svn copy $REPO/trunk $REPO/branches/2xxx-01-01" cmt

cd ..

# change the file on the branch

svn_cmd "Checkout branch" "svn co $REPO/branches/2xxx-01-01"

cd 2xxx-01-01

echo "line 2" >> test.txt

svn_cmd "Commit on the branch" "svn commit" cmt

svn_cmd "Checking branch history" "svn log -v test.txt"

cd ../trunk

# check the history

svn_cmd "Checking trunk history" "svn log -v test.txt"

svn_cmd "Diff trunk and branch" "svn diff $REPO/trunk $REPO/branches/2xxx-01-01"

# remove the trunk and branches directories

svn_cmd "Removing trunk dir" "svn rm $REPO/trunk" cmt

svn_cmd "Removing branches dir" "svn rm $REPO/branches" cmt

svn_cmd "What does the repository contain?" "svn ls $REPO"

cd ..

echo "Enter y to: rm -rf svn trunk 2xxx-01-01"

read a

if [ "$a" = "y" ]; then

rm -rf $HOME/svn trunk 2xxx-01-01

fi
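One thing to be aware of in the script above is that svn_cmd receives each command as a single string and relies on the shell to split it back into words, which goes wrong if a path contains spaces. Here is a minimal sketch of an alternative wrapper that takes the command as separate arguments instead. The name run_cmd and its internal variable names are my own inventions for illustration and are not part of the original script.

```shell
#!/bin/sh
# Hypothetical variant of svn_cmd: the comment is the first argument and
# the command follows as separate words, so quoting survives intact.
run_cmd() {
    rc_comment=$1
    shift
    echo "Comment: $rc_comment"
    echo "Executing: $*"
    # Run the command exactly as given, one argument per word.
    "$@"
    rc_status=$?
    echo ""
    # Pass the command's exit status back to the caller.
    return "$rc_status"
}

# Example use (assumes svn is installed and REPO is set as in the script):
#   run_cmd "Creating trunk dir" svn mkdir "$REPO/trunk" -m "Creating trunk dir"
```

With this form the commit message can be passed directly with -m, so the temporary tmp.txt file is no longer needed, and the caller can test the return status if it wants to stop on the first failure.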

Do you want to run a spell check on your blog or web site? Perhaps not every day - but occasionally this can be helpful to remove basic errors. You can combine a wget download with a find command and aspell to get a list of your typos. Here's how:

#!/bin/sh

#check spelling on The Molecular Universe

wget --mirror -p --html-extension --convert-links \

-P ./ http://www.themolecularuniverse.com

find www.themolecularuniverse.com -name "*.html" |

while read file

do

echo "$file"

aspell --mode=html list < "$file" | sort -u

done

Put the commands above into a file, then execute the file at the command line, and you will run a spell check of www.themolecularuniverse.com - add an argument and/or some error checking to check your own sites.
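As a rough sketch of the "add an argument and some error checking" suggestion, the same wget, find and aspell pipeline can be wrapped in a function that takes the site URL as its argument. The function names site_host and check_site are hypothetical, and the sketch assumes that wget --mirror stores the pages in a directory named after the host part of the URL, as it did for the script above.

```shell
#!/bin/sh
# Derive the directory name that wget --mirror is assumed to create:
# the URL with its scheme and any path stripped off.
site_host() {
    echo "$1" | sed -e 's|^[a-zA-Z]*://||' -e 's|/.*$||'
}

# Mirror a site and list the words aspell does not recognise, per page.
check_site() {
    if [ -z "$1" ]; then
        echo "usage: check_site URL" >&2
        return 1
    fi
    for tool in wget aspell; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "error: $tool not found" >&2
            return 1
        fi
    done
    dir=$(site_host "$1")
    wget --mirror -p --html-extension --convert-links -P ./ "$1"
    # wget can report errors for individual links, so check for the
    # mirrored directory rather than relying on its exit status.
    if [ ! -d "$dir" ]; then
        echo "error: nothing was downloaded into $dir" >&2
        return 1
    fi
    find "$dir" -name "*.html" |
    while read -r file
    do
        echo "$file"
        aspell --mode=html list < "$file" | sort -u
    done
}

# Example use:
#   check_site http://www.themolecularuniverse.com
```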

I scanned an old paper recently - and was left with a huge PDF. The PDF was storing multiple images with color, and allowed for too much intensity variation for each pixel - hence its size. Text can be stored more efficiently than that!

So, here is a short script which extracts the pages of a PDF, reduces them to black and white, and reconstructs the PDF. This produced about a factor of ten reduction in size for me, and also improved the legibility as the text is now high contrast black on white.

Yes, the script is crude, and it contains a useless use of cat. Clean up and optimization are left as exercises for anyone interested ...

#!/bin/sh

i=0

while [ $i -lt 27 ]

do

i=`expr $i + 1`

echo $i

d=`echo $i | awk '{printf "%02d",$1}'`

echo $d

pdftk A=paper.pdf cat A$i output page$d.pdf

pdftoppm page$d.pdf -gray eh

cat eh-000001.pgm | pnmquant 2 | pgmtopgm | \

pamditherbw -threshold | pnmtops -nocenter -imagewidth=8.5 > tmp.ps

ps2pdf -dPDFSETTINGS=/ebook tmp.ps

mv tmp.pdf newpage$d.pdf

done

pdftk newpage*.pdf cat output newcombined.pdf
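For anyone who wants to attempt the clean up exercise, here is one possible sketch. It keeps the same tools and flags as the script above, but asks pdftk for the page count rather than hard-coding 27, pads the page number with printf, and drops the cat. The function names pad2, page_count and shrink_pdf are made up for illustration, and the sketch assumes that pdftoppm names its output eh-000001.pgm, as it did for me.

```shell
#!/bin/sh
# Zero-pad a page number to two digits.
pad2() {
    printf '%02d' "$1"
}

# Read the page count from the NumberOfPages line of pdftk's dump_data report.
page_count() {
    pdftk "$1" dump_data | awk '/^NumberOfPages/ { print $2 }'
}

# shrink_pdf INPUT.pdf OUTPUT.pdf
shrink_pdf() {
    in=$1
    out=$2
    n=$(page_count "$in")
    if [ -z "$n" ]; then
        echo "error: could not read page count from $in" >&2
        return 1
    fi
    i=1
    while [ "$i" -le "$n" ]
    do
        d=$(pad2 "$i")
        pdftk A="$in" cat A"$i" output "page$d.pdf"
        pdftoppm "page$d.pdf" -gray eh
        # Redirect the image into pnmquant instead of piping it through cat.
        pnmquant 2 < eh-000001.pgm | pgmtopgm |
            pamditherbw -threshold | pnmtops -nocenter -imagewidth=8.5 > tmp.ps
        ps2pdf -dPDFSETTINGS=/ebook tmp.ps
        mv tmp.pdf "newpage$d.pdf"
        i=$((i + 1))
    done
    pdftk newpage*.pdf cat output "$out"
}

# Example use:
#   shrink_pdf paper.pdf newcombined.pdf
```

As in the original, the two digit padding means the final newpage*.pdf glob only sorts correctly for documents of up to 99 pages.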

Occasionally I find myself watching a video, like a Houses of Parliament committee meeting, that really ought not to be hosted by a government. So occasionally I take it upon myself to figure out how to obtain a local copy of such a video.

However, it seems to me that there may be quite an extensive army of software engineers generating ever more complicated JavaScript wrappings of their videos in an attempt to thwart the efforts of the innocents among us who simply want a local copy of what is being distributed anyway.

But, there is always 'ngrep', which lets you examine your currently open connections and communications, and see which flash and other file streams you have open, and then if appropriate grab them in a convenient manner for subsequent viewing. Here is what you have to do to use ngrep, assuming you are using an Ubuntu-like Linux environment.

First get a copy of ngrep into your environment.

sudo apt-get install ngrep

Then fire up ngrep, play the video you are interested in, in your browser, and then sort through the ngrep output for the file that you are looking for, in this case streams that are in the '.flv' format.

sudo ngrep -d any '.flv' port 80 | tee ngrep.out

Here the output is being copied to ngrep.out as well as to the screen, so that you can search through that output and find the complete URL of the obscure source of the .flv file, which you can then pass to 'wget' to copy the file to your hard drive.
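If you would rather not pick through ngrep.out by hand, the search can be roughly automated. The sketch below assumes that ngrep was run with the extra option -W byline, so that each HTTP header appears on its own line and a carriage return shows up as a trailing dot. It also assumes that each request has a GET line followed by a Host line. The function name flv_urls is hypothetical.

```shell
#!/bin/sh
# Read ngrep output (captured with -W byline) on stdin and print one URL
# for each GET request whose path contains .flv.
flv_urls() {
    awk '
        /^GET .*\.flv/ { path = $2 }
        /^Host: / && path != "" {
            host = $2
            # Strip the trailing dot or carriage return left by the capture.
            sub(/[.\r]+$/, "", host)
            print "http://" host path
            path = ""
        }
    '
}

# Example use, fetching each distinct URL with wget:
#   flv_urls < ngrep.out | sort -u | wget -i -
```

The URLs are built with http:// because the capture above only watches port 80.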

Once this is done, you can then make use of your own local copy of that video stream...and if appropriate make that video, which was formerly hosted by some terrible government web site in some terrible Silverlight-like format, available on YouTube, as a general service to the taxpayer.


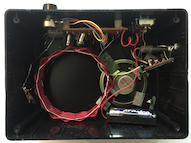

While the Hacker Sovereign II is a wonderful radio, I listen to the radio shown in the image on the left a great deal more. It has some practical attributes that are hard to beat.

This is a transistor radio designed by the great German electronics designer and author, Burkhard Kainka, as a simple project to be constructed in a video cassette box. I used a plastic enclosure from Radio Shack for my version, but the effect is the same...if not better...though I say so myself!

One of the key attributes of this design is the use of a single 1.5 volt AA battery as a power source. These batteries are common and inexpensive, unlike the unusual PP9 batteries in a Hacker. The power consumption is only about 12 milliamps (mA) when the speaker is in use and about 0.8 mA when an earphone is used. (I put a 1k resistor in series with a pair of earbuds for the earphone option). With that power consumption, in earphone mode, you can expect something in the region of 100 days (see * below) of continuous operation from the radio on a single AA battery, and if you use the radio for just a few hours a day, then you will be talking about several years of use.

I used a basket weave coil rather than a ferrite rod coil antenna - because I had such a coil to hand and it seemed to work fine.

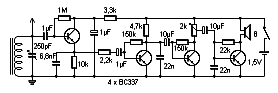


The circuit is shown on the left. As mentioned, a 1k ohm resistor in series with a pair of stereo ear buds (connected so that each ear bud is in the series circuit) forms the earphone option (this is not shown in the diagram). There is a switch that controls the use of speaker or headphones, so that you can just leave the headphones plugged in, which is more convenient than constantly plugging and unplugging.

I also added a pair of white LEDs connected to a Joule Thief circuit, for lighting purposes, as shown in the photograph of the actual radio above. The Joule Thief generates a lot of radio frequency interference, so you cannot use the radio and the light at the same time, unfortunately. (I didn't think of this a priori!)

Now probably this bedroom furniture will not win too many style awards, at least not with present fashions. However, if you are interested in late night American paranormal phone-ins and ufology specials (and who isn't?) this is the radio to own.

* Here is how the ~100 days was computed. An alkaline AA battery can supply about 2600 mAh when used gently, as in this case. Hence we will have 2600/0.8 = 3250 hours of use from the radio. And 3250 hours is 3250/24 = 135 days, so roughly 100 days is a conservative figure.
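The same arithmetic is easy to repeat for other batteries or loads. Here is a small sketch. The function name battery_days is made up, and applying the 2600 mAh figure to the 12 mA speaker load is my own assumption, since a battery delivers less than its gentle-use capacity at higher currents.

```shell
#!/bin/sh
# battery_days CAPACITY_MAH CURRENT_MA
# Days of continuous operation: capacity / current gives hours, / 24 gives days.
battery_days() {
    awk -v cap="$1" -v cur="$2" 'BEGIN { printf "%.1f\n", cap / cur / 24 }'
}

battery_days 2600 0.8   # earphone mode: prints 135.4
battery_days 2600 12    # speaker mode, same capacity assumed: prints 9.0
```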